Create Chat Interfaces#

Both Streamlit and Panel provide special components to help you build conversational apps.

| Streamlit | Panel | Description |
|---|---|---|
| st.chat_message | pn.chat.ChatMessage | Display a chat message |
| st.chat_input | | Input a chat message |
| | | Display the output of long-running tasks in a container |
| | pn.chat.ChatFeed | Display multiple chat messages |
| | pn.chat.ChatInterface | High-level, easy to use chat interface |
| StreamlitCallbackHandler | PanelCallbackHandler | Display the thoughts and actions of a LangChain agent |
| | | Persist the memory of a LangChain agent |

The starting point for most Panel users is the high-level ChatInterface or PanelCallbackHandler, not the low-level ChatMessage and ChatFeed components.

Chat Message#

Let's see how to migrate an app that uses st.chat_message.

Streamlit Chat Message Example#

import streamlit as st

with st.chat_message("user"):
    st.image("https://streamlit.io/images/brand/streamlit-logo-primary-colormark-darktext.png")
    st.write("# A faster way to build and share data apps")

Panel Chat Message Example#

import panel as pn

pn.extension(design="material")

message = pn.Column(
    "https://panel.holoviz.org/_images/logo_horizontal_light_theme.png",
    "# The powerful data exploration & web app framework for Python"
)

pn.chat.ChatMessage(message, user="user").servable()

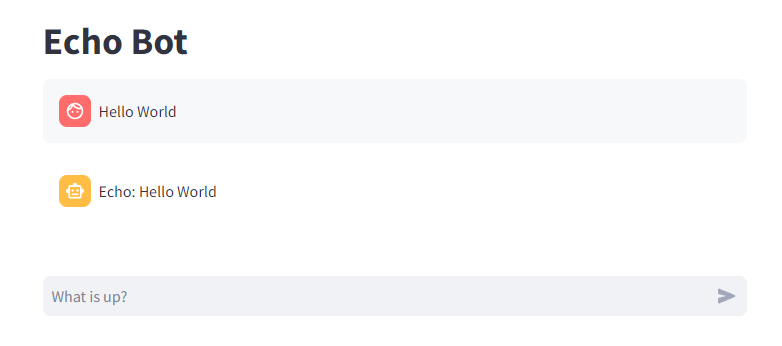

Echo Bot#

Let's see how to migrate a bot that echoes the user input.

Streamlit Echo Bot#

import streamlit as st

def echo(prompt):
    return f"Echo: {prompt}"

st.title("Echo Bot")

if "messages" not in st.session_state:
    st.session_state.messages = []

for message in st.session_state.messages:
    with st.chat_message(message["role"]):
        st.markdown(message["content"])

if prompt := st.chat_input("What is up?"):
    st.chat_message("user").markdown(prompt)
    st.session_state.messages.append({"role": "user", "content": prompt})

    response = echo(prompt)

    with st.chat_message("assistant"):
        st.markdown(response)

    st.session_state.messages.append({"role": "assistant", "content": response})

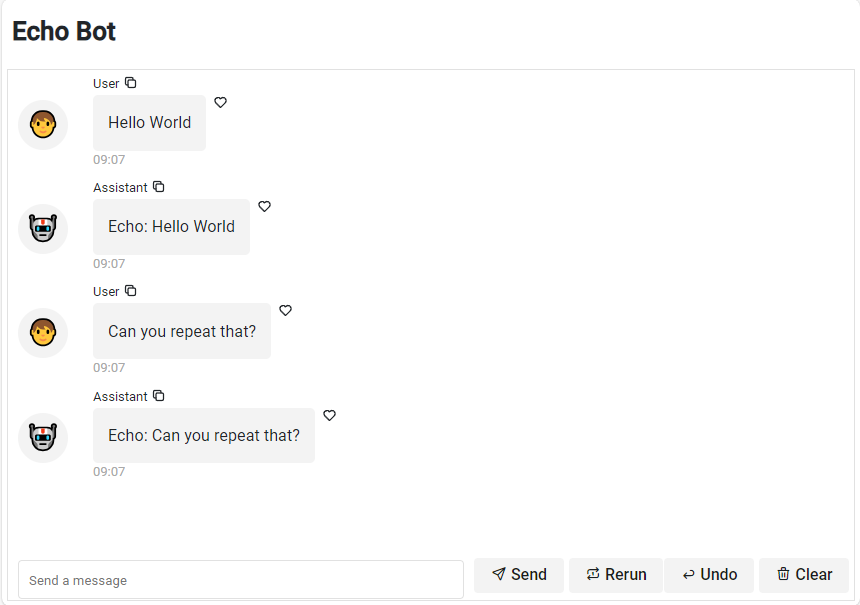
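Streamlit reruns the whole script on every interaction, which is why the example above stores the conversation in st.session_state and replays it before handling new input. The sketch below simulates that loop in plain Python, without Streamlit. The rerun function and the plain dict standing in for st.session_state are hypothetical names used only for illustration.

```python
def echo(prompt):
    return f"Echo: {prompt}"


def rerun(session_state, prompt=None):
    """Simulate one script run and return the messages it would render.

    `session_state` is a plain dict standing in for st.session_state
    (an assumption made so the sketch runs without Streamlit).
    """
    messages = session_state.setdefault("messages", [])

    # Replay the stored history, as the for-loop in the app does.
    rendered = list(messages)

    if prompt:
        # Render and store the new user message and the bot's reply.
        for role, content in (("user", prompt), ("assistant", echo(prompt))):
            message = {"role": role, "content": content}
            rendered.append(message)
            messages.append(message)

    return rendered


state = {}
first = rerun(state, "Hello")   # renders the new exchange only
second = rerun(state, "Again")  # replays the history, then adds the new exchange
print(len(first), len(second))
```

With Panel's ChatInterface, the component keeps the messages itself, so the migrated app needs no equivalent of this bookkeeping.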

Panel Echo Bot#

import panel as pn

pn.extension(design="material")

def echo(contents, user, instance):
    return f"Echo: {contents}"

chat_interface = pn.chat.ChatInterface(
    callback=echo,
)

pn.Column(
    "# Echo Bot",
    chat_interface,
).servable()
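Because the callback is an ordinary function of (contents, user, instance), it can be exercised without starting a server. The sketch below also shows a generator variant: Panel's chat components accept generator callbacks, and each yielded value replaces the message shown so far, which gives a streaming effect. The name stream_echo is hypothetical, and passing None for instance is an assumption that only works here because neither function uses it.

```python
def echo(contents, user, instance):
    # Same callback as in the Panel example: return the full reply at once.
    return f"Echo: {contents}"


def stream_echo(contents, user, instance):
    # Generator variant (hypothetical name): yield the reply as it grows,
    # one character at a time.
    message = "Echo: "
    for character in contents:
        message += character
        yield message


# Call the callbacks directly; `instance` is unused, so None is passed.
print(echo("Hello", "User", None))
print(list(stream_echo("Hi", "User", None)))
```

To try the streaming variant in the app, pass it as callback=stream_echo to pn.chat.ChatInterface.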

Search Agent with Chain of thought#

Let's migrate an agent that uses the DuckDuckGo search tool and shows its chain of thought.

Streamlit Search Agent with Chain of thought#

from langchain.llms import OpenAI
from langchain.agents import AgentType, initialize_agent, load_tools
from langchain.callbacks import StreamlitCallbackHandler
import streamlit as st

llm = OpenAI(temperature=0, streaming=True)
tools = load_tools(["ddg-search"])
agent = initialize_agent(
    tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True
)

if prompt := st.chat_input():
    st.chat_message("user").write(prompt)
    with st.chat_message("assistant"):
        st_callback = StreamlitCallbackHandler(st.container())
        response = agent.run(prompt, callbacks=[st_callback])
        st.write(response)

Panel Search Agent with Chain of thought#

from langchain.llms import OpenAI
from langchain.agents import AgentType, initialize_agent, load_tools
import panel as pn

pn.extension(design="material")

llm = OpenAI(temperature=0, streaming=True)
tools = load_tools(["ddg-search"])
agent = initialize_agent(
    tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True,
)

async def callback(contents, user, instance):
    callback_handler = pn.chat.langchain.PanelCallbackHandler(instance)
    await agent.arun(contents, callbacks=[callback_handler])

pn.chat.ChatInterface(callback=callback).servable()

More Panel Chat Examples#

For more inspiration, check out panel-chat-examples.